Aditya Rasam

I am a Computer Vision Scientist at Alarm.com Inc., focused on SLAM (Simultaneous Localization and Mapping) for autonomous drones. I graduated from NC State University with a passion for developing intelligent, autonomous systems that perceive their environment through vision and non-vision sensors and use that perception for decision making. At NC State, I studied Computer Vision, SLAM, and embedded systems to build the skills needed to develop autonomous robotic systems, and I worked on several course projects that helped me understand the intricacies of these concepts. My learning was further bolstered by an internship with a research group at SIEMENS Corporate Technology in Princeton, NJ, where I worked on smart manufacturing systems, demonstrated using an autonomous gantry robot. After graduating, I joined Alarm.com Inc. as an Associate Computer Vision Scientist, where I am gaining practical experience with the SLAM side of autonomous aerial navigation for drones.

Education

North Carolina State University

GPA: 3.87

Graduated May 2018

University of Mumbai

GPA: 4.0

Skills & Courses

- C++, Python, embedded C

- Linux, Shell scripting, ROS, MATLAB

- CMake, Docker

- RGB-D SLAM, Visual-Inertial Odometry (EKF), ICP (Lidar array/Time-of-Flight) odometry

- Deep learning, Convolutional Neural Networks, Fully Convolutional Network (VGG-16), Recurrent Neural Network, Long Short-term Memory

- Semantic Segmentation, Morphological transforms, Image filtering, 3D Reconstruction, Edge detection, Histogram Equalization, Image Sharpening, Image Classification

- PyTorch, Keras, Theano, TensorFlow, OpenCV, CUDA

- Regression (Linear/Logistic), Artificial Neural Network, K-Nearest Neighbor Classification, Principal Component Analysis

- Gaussian Model, Gaussian Mixture Model, t-distribution, Factor-space models

- numpy, scipy, scikit-learn, matplotlib, pandas

- Computer Vision, Autonomous Navigation with Computer Vision, Deep Learning, Digital Image Processing

- Machine Learning, Probabilistic Graphical Models

- Embedded System Design, Real-Time Control of Automated Manufacturing, Control of Mobile Robots

Work Experience

Senior Computer Vision Scientist

Associate Computer Vision Scientist

Research Intern

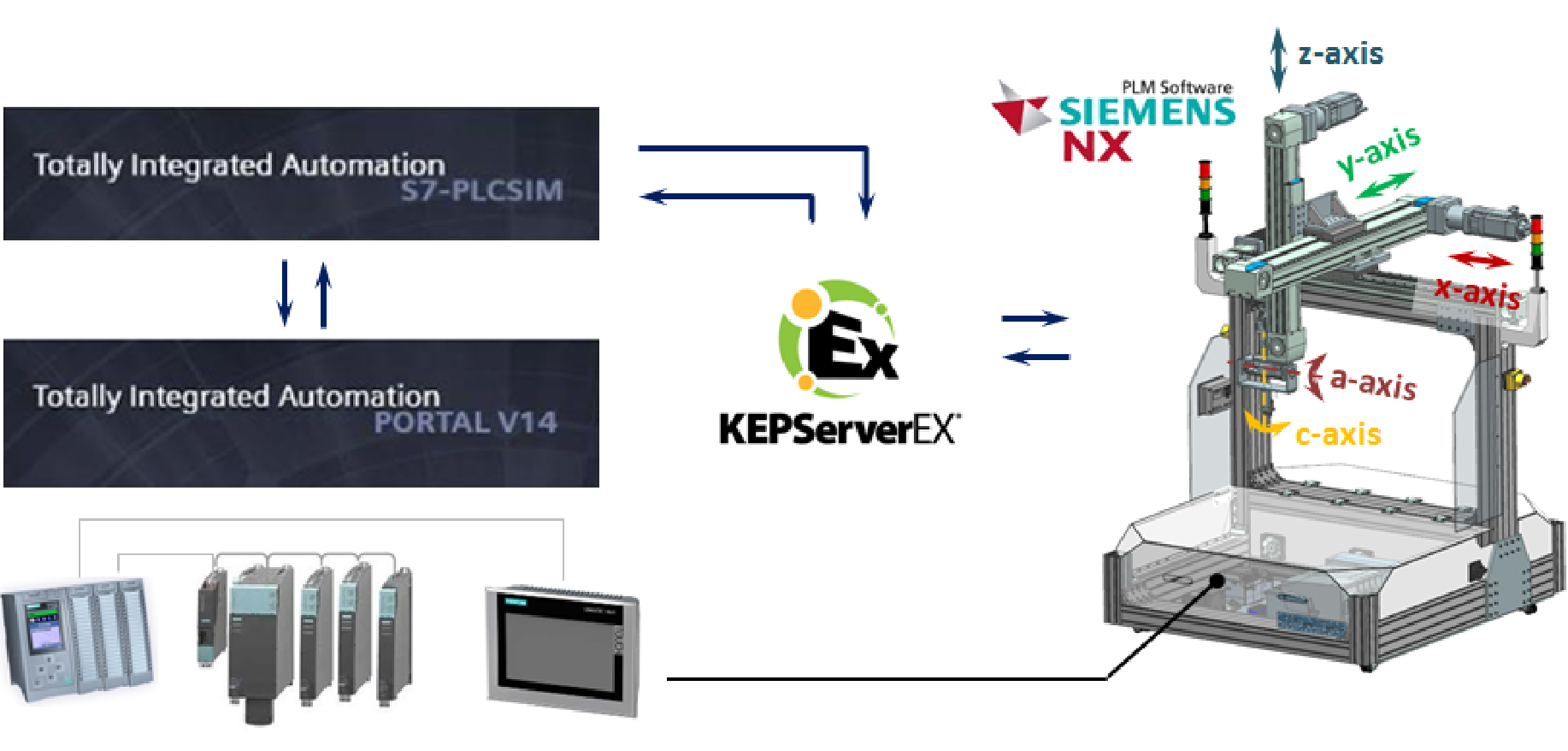

In the summer of 2017, I interned at SIEMENS Corporate Technology, where I was part of a team focused on the development and implementation of a gantry robot that could behave autonomously and perform decision-making tasks. My contribution was developing program libraries to perform 6 DOF motion (path interpolation) using distributed servo drive systems. The implementation was also tested using the simulation functionality in SIEMENS NX Mechatronics Concept Designer. For this project, we published a paper at NAMRC (North American Manufacturing Research Council).

Executive Application Engineer

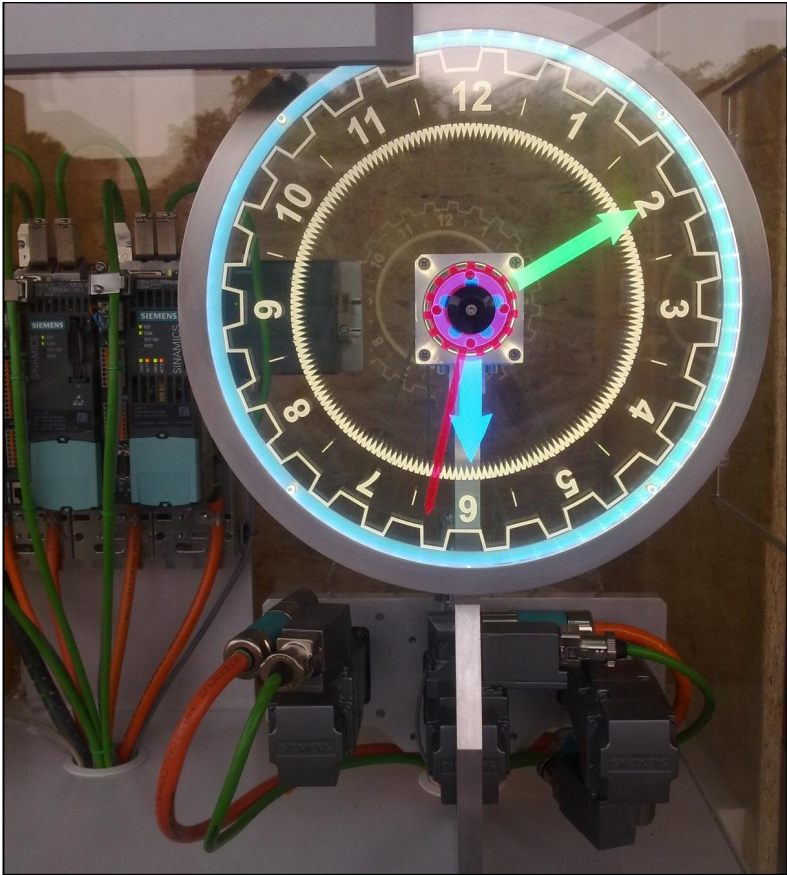

At SIEMENS, I was part of an application development team that prototyped cyber-physical systems and mechatronics-based solutions for highly dynamic motion in the production and material handling industries. My role was to study the kinematics of the system, research and select an appropriate servo-drive system (consisting of an electrical drive, servo motors, and an embedded motion controller), and finally program the motion controller to execute the complex motion profile demanded by the application. Highlights of my work at SIEMENS include: independent but coordinated (position-synchronized) motion control for 3 servo axes, which I demonstrated using the three hands of a clock; an EMS (Electric Monorail System) for car handling (developed for Mercedes); a robot arm (Cartesian kinematics) for car-chassis welding; and a highly dynamic intermittent motion system for a milling application.

Academic Projects

Design of a Robotic Computer Vision System for Autonomous Navigation

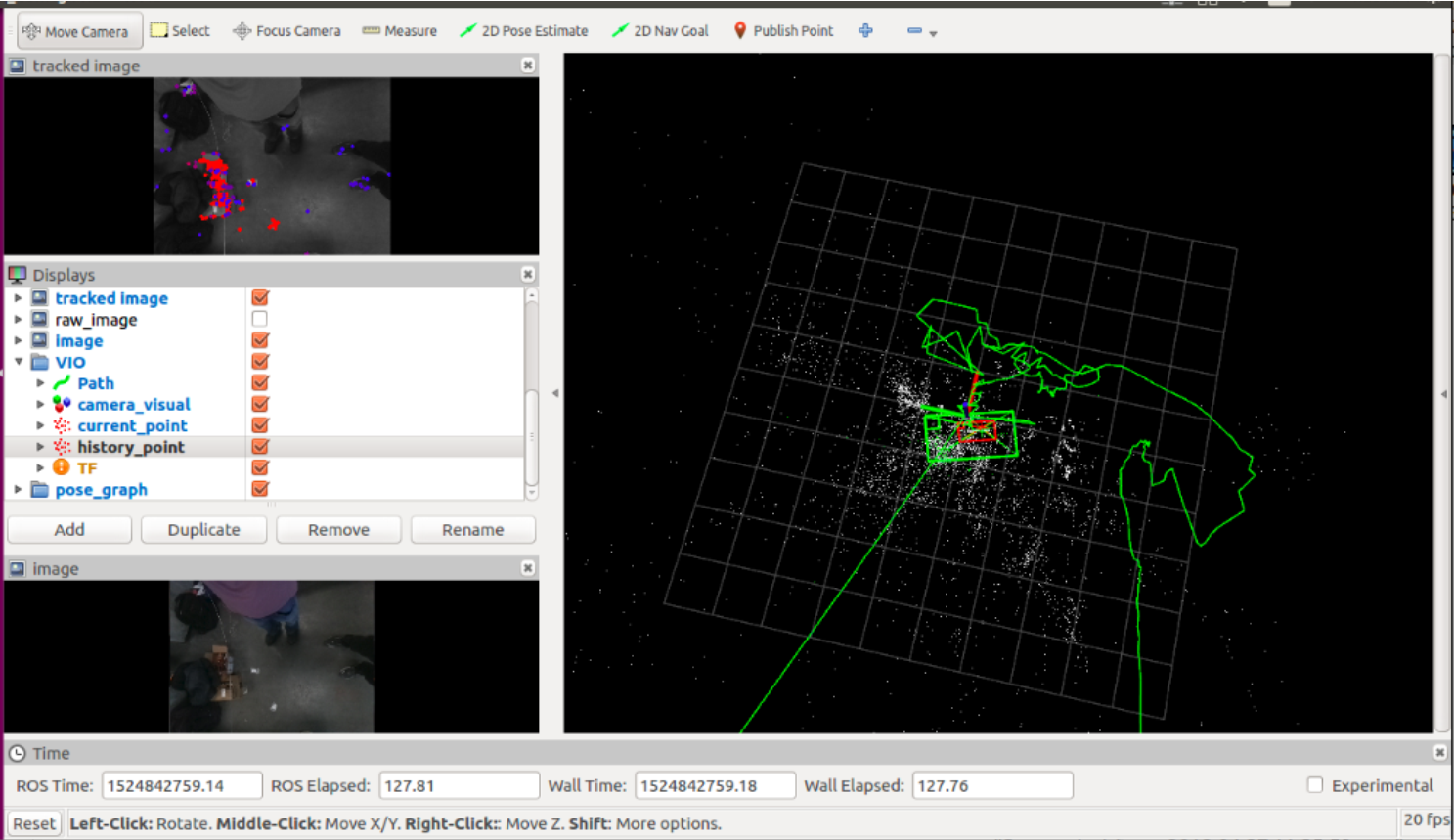

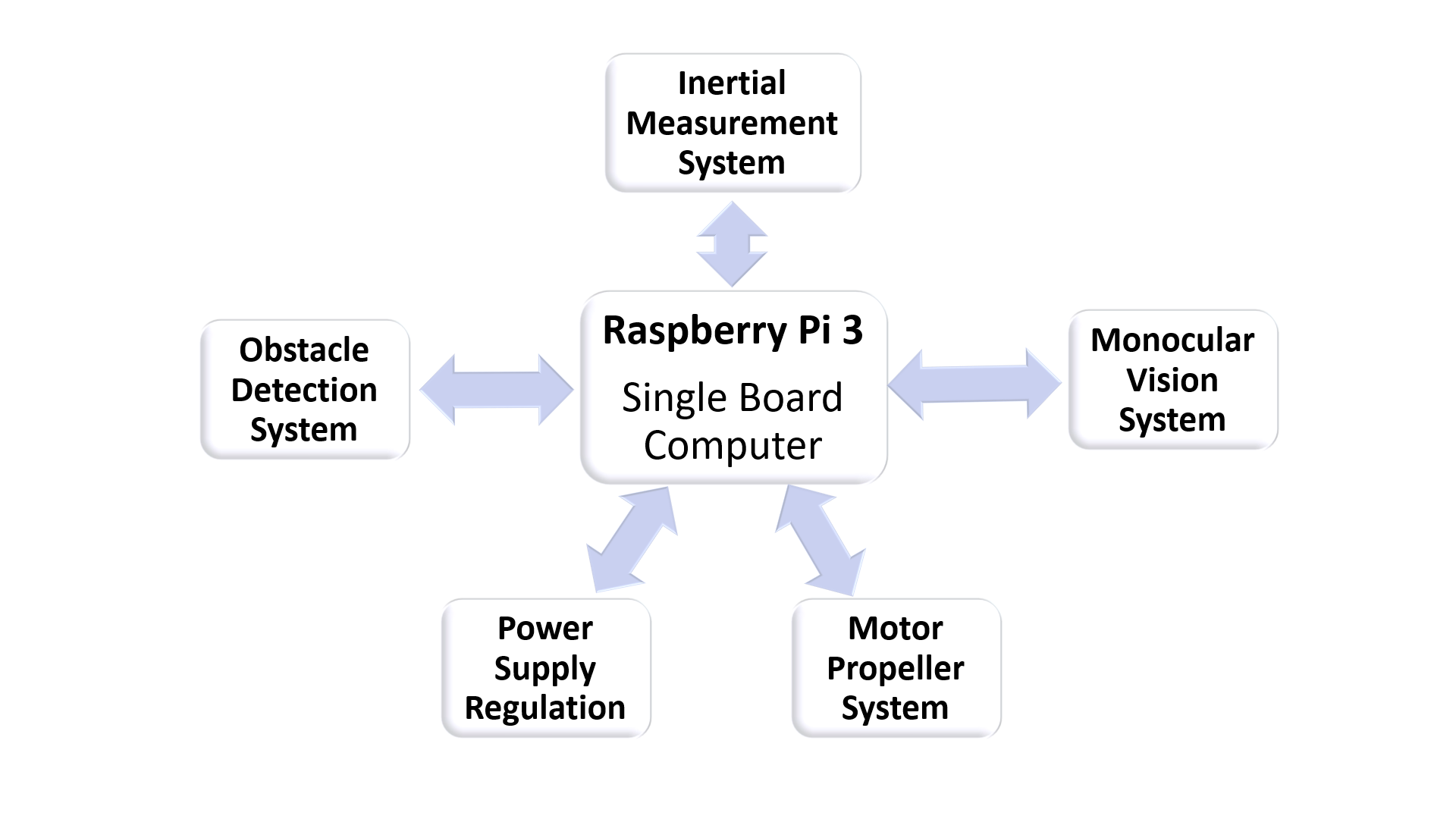

The goal of this project was to create two autonomous vehicles, one ground and one aerial, to map and navigate environments such as construction sites autonomously. The aerial vehicle, in our case an RC blimp, enhanced the autonomous navigation task by providing an aerial view of the site to the ground vehicle (HUSKY), which previously relied only on its onboard stereo vision. While performing this task, the blimp also had to navigate the environment autonomously using visual-inertial odometry estimated from its onboard inertial and monocular vision sensors. Development of the blimp was divided into three parts: hardware interfacing and firmware/driver development, SLAM, and context awareness. I contributed to the sensor interfacing and firmware development, the control algorithm development, and the implementation of visual-inertial SLAM (VINS-Mono). The sensor firmware and control algorithm ran on a lightweight yet capable single-board computer, the Raspberry Pi 3, while computationally heavier algorithms such as VINS-Mono SLAM ran on an NVIDIA Jetson TX1. All development used the ROS package structure, which allowed the Raspberry Pi and the NVIDIA Jetson to share data over ROS topics.

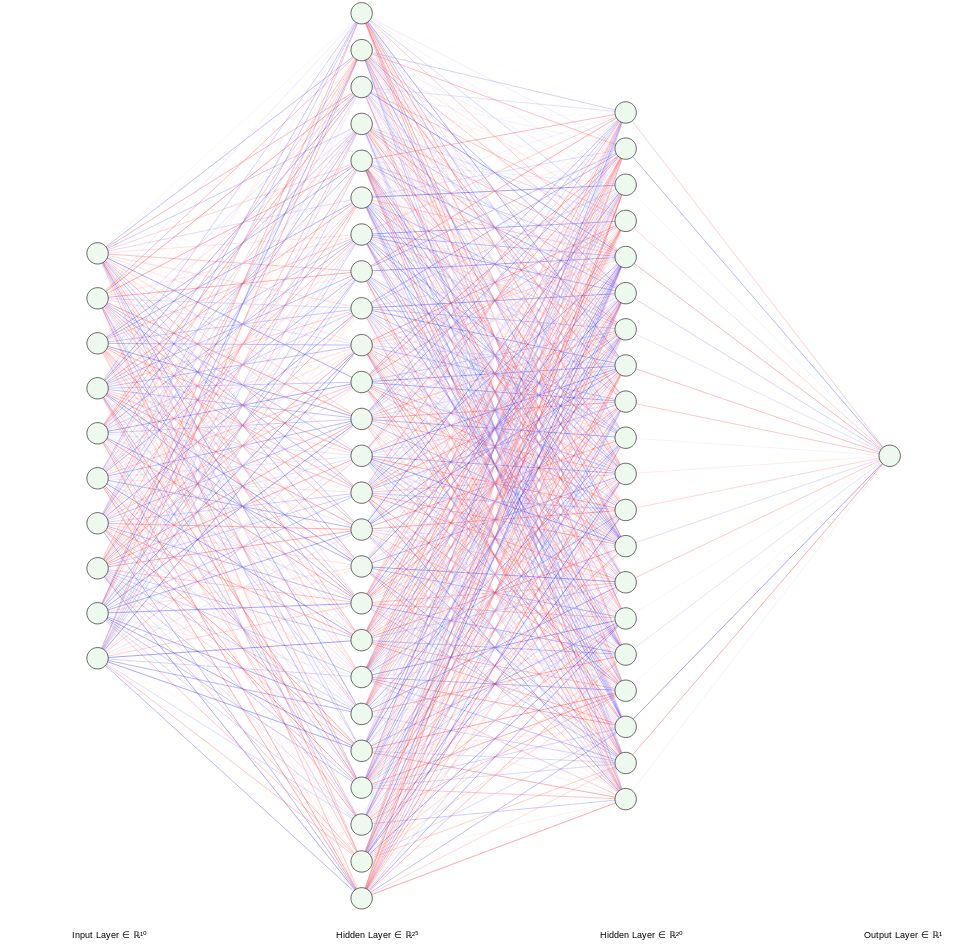

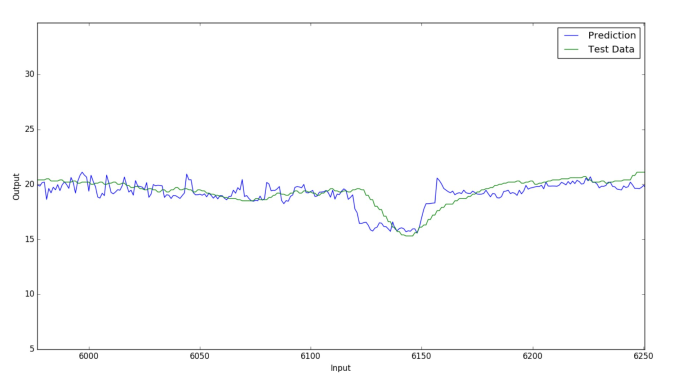

Respiratory Rate Estimation

This project aimed to estimate an individual's respiratory rate from accelerometer data, heart rate, and body temperature. We implemented two models: first, an Artificial Neural Network (ANN) that does not take temporal dynamics into account; second, a Kalman filter applied on top of the ANN output to smooth and further improve the estimate. I was able to estimate the respiratory rate with an RMSE of 2.98 in the first case, which improved to 2.12 in the second.

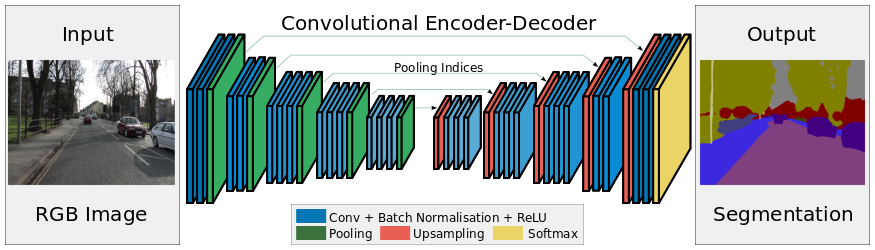
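The Kalman filter stage described above can be illustrated with a minimal sketch. It assumes a scalar state (the respiratory rate) that follows a random-walk model, and treats each ANN output as a noisy measurement. The function name `kalman_smooth`, the variances `process_var` and `meas_var`, and the sample values are hypothetical and are not taken from the project.

```python
def kalman_smooth(measurements, process_var=0.05, meas_var=1.0):
    """Scalar Kalman filter with a random-walk state model.

    measurements: per-sample ANN outputs (treated as noisy observations).
    process_var:  assumed variance of the state change between samples.
    meas_var:     assumed variance of the ANN output error.
    """
    estimate = measurements[0]  # initialise with the first ANN output
    error_var = meas_var        # initial uncertainty of that estimate
    smoothed = [estimate]
    for z in measurements[1:]:
        # Predict: the state is assumed unchanged, so only uncertainty grows.
        error_var += process_var
        # Update: blend prediction and new measurement using the Kalman gain.
        gain = error_var / (error_var + meas_var)
        estimate += gain * (z - estimate)
        error_var *= 1.0 - gain
        smoothed.append(estimate)
    return smoothed


# Hypothetical ANN outputs, used only to exercise the filter.
ann_outputs = [16.0, 18.5, 15.2, 17.1, 19.0, 16.4]
smoothed = kalman_smooth(ann_outputs)
```

With these assumed variances the filtered sequence varies less from sample to sample than the raw ANN outputs, which is the effect the second model relies on.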

Semantic Segmentation with SegNet

In this project, I implemented a paper titled 'Semantic Segmentation with SegNet model', which focuses on a novel encoder-decoder technique based on the VGG-13 fully convolutional architecture. The task was to port the original Caffe code to Keras and test it on the CamVid dataset. We successfully implemented it with 91% accuracy.

Face/Non Face Image classification with Posterior probability estimation using Bayes rule

The goal of this project was to classify a given image as face or non-face. The data was modelled with a likelihood function using models such as a Gaussian and a Mixture of Gaussians, and Bayes' rule was then used to find the posterior probability that quantifies whether a given image is a face or a non-face. The first image shows the mean of the face dataset used to model the likelihood function with a Gaussian distribution, while the second image represents the covariance of the face dataset. We used the AFLW (Annotated Facial Landmarks in the Wild) dataset.

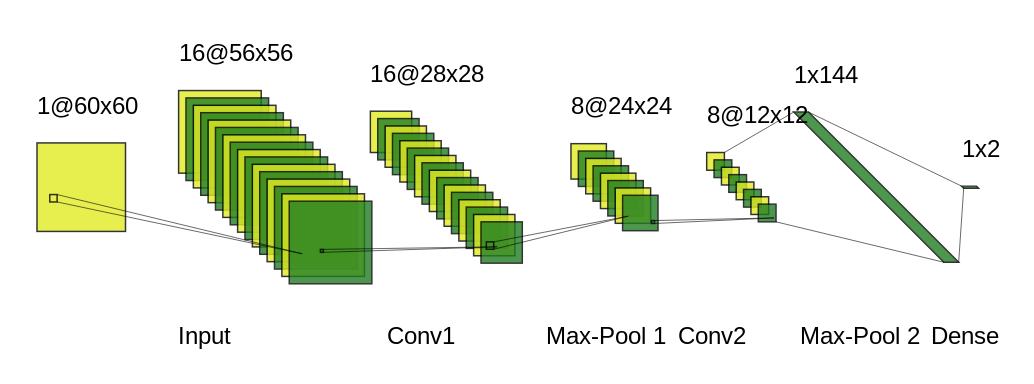
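The posterior computation described above can be sketched as follows. The sketch assumes one Gaussian per class with a diagonal covariance, which is a simplification of the full-covariance and mixture models used in the project. The names `log_gaussian` and `posterior_face`, the two-dimensional feature vectors, and all parameter values are hypothetical.

```python
import math


def log_gaussian(x, mean, var):
    """Log-density of a diagonal-covariance Gaussian at feature vector x."""
    return sum(
        -0.5 * (math.log(2.0 * math.pi * v) + (xi - m) ** 2 / v)
        for xi, m, v in zip(x, mean, var)
    )


def posterior_face(x, face, non_face, prior_face=0.5):
    """P(face | x) from Bayes' rule; each class model is a (mean, var) pair."""
    log_f = log_gaussian(x, *face) + math.log(prior_face)
    log_n = log_gaussian(x, *non_face) + math.log(1.0 - prior_face)
    # Subtract the larger log term before exponentiating to avoid underflow.
    top = max(log_f, log_n)
    ef, en = math.exp(log_f - top), math.exp(log_n - top)
    return ef / (ef + en)


# Hypothetical class models over a 2-D feature vector (assumed values).
face_model = ([0.6, 0.5], [0.01, 0.01])
non_face_model = ([0.3, 0.3], [0.04, 0.04])

p_near_face = posterior_face([0.58, 0.52], face_model, non_face_model)
p_near_non_face = posterior_face([0.30, 0.30], face_model, non_face_model)
```

An image would be labelled a face when the posterior exceeds 0.5. Working in the log domain matters in practice because image feature vectors have many dimensions and the raw likelihoods underflow quickly.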

Face/Non Face Image classification using Deep learning architectures like CNN

The goal of this project was to classify a given image as face or non-face. We selected the AFLW dataset and implemented two architectures, one in PyTorch and the other in TensorFlow. The code was run on both CPU and GPU for learning purposes, and we achieved 92% classification accuracy.

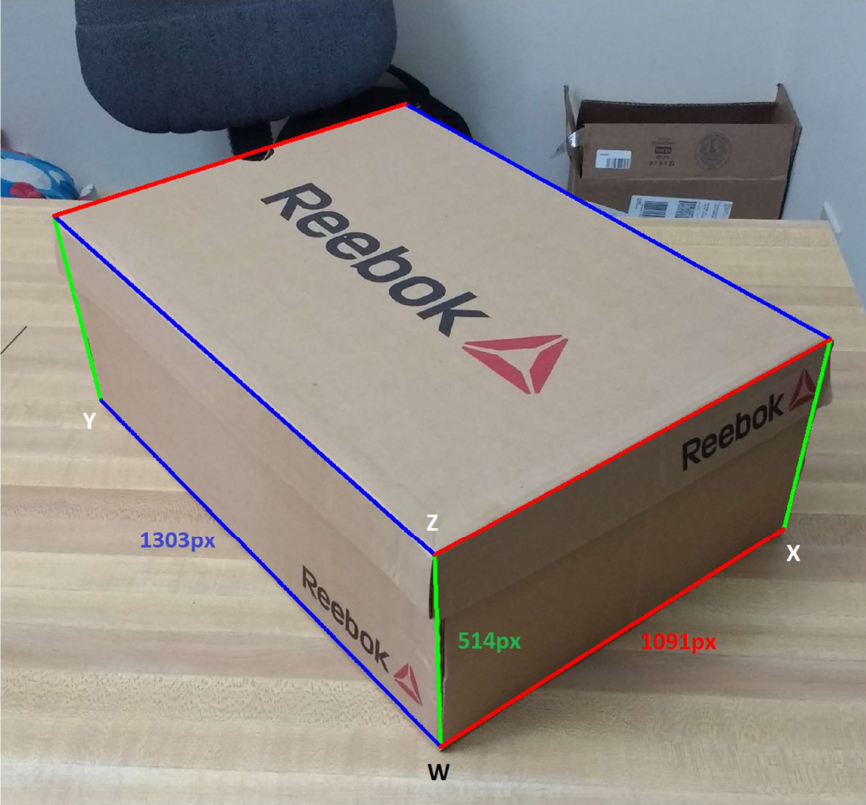

3D re-construction of an object given a 2D image using Homographic transformation

In this project, I implemented a script that, given an image of a box in 3-point perspective, constructs a 3D model using texture maps obtained from the homography matrices. The key concepts implemented in the script were the Line Segment Detector, the RANSAC algorithm, vanishing point estimation, estimation of the projection matrices, estimation of the homography matrices, calculation of the texture maps, and 3D stitching using a VRML file.

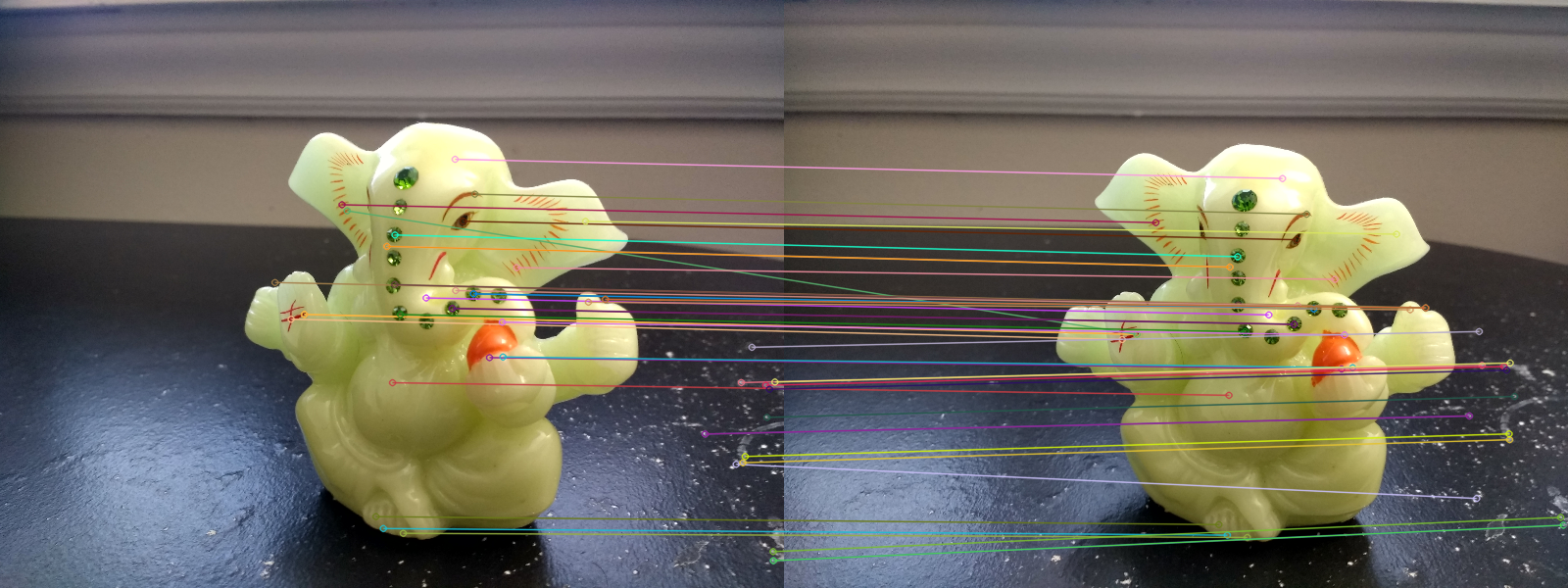
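The core geometric step of vanishing point estimation can be sketched with homogeneous coordinates: the line through two points is their cross product, and the intersection of two lines is again a cross product. The sketch below uses only two segments with made-up endpoints; in the project many detected segments were combined with RANSAC, which is not shown here. The function names are hypothetical.

```python
def cross(a, b):
    """Cross product of two 3-vectors."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def line_through(p, q):
    """Homogeneous line through two image points given as (x, y)."""
    return cross((p[0], p[1], 1.0), (q[0], q[1], 1.0))


def vanishing_point(seg_a, seg_b):
    """Intersection of the lines supporting two segments, or None if parallel."""
    v = cross(line_through(*seg_a), line_through(*seg_b))
    if abs(v[2]) < 1e-12:
        return None  # lines are parallel in the image: point at infinity
    return (v[0] / v[2], v[1] / v[2])


# Two hypothetical segments lying on edges that are parallel in 3D.
seg_1 = ((0.0, 0.0), (4.0, 2.0))  # lies on y = x / 2
seg_2 = ((0.0, 6.0), (4.0, 5.0))  # lies on y = 6 - x / 4
vp = vanishing_point(seg_1, seg_2)
```

For these two segments the supporting lines meet at (8, 4), which is the candidate vanishing point for that edge direction.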

Feature detection and Matching

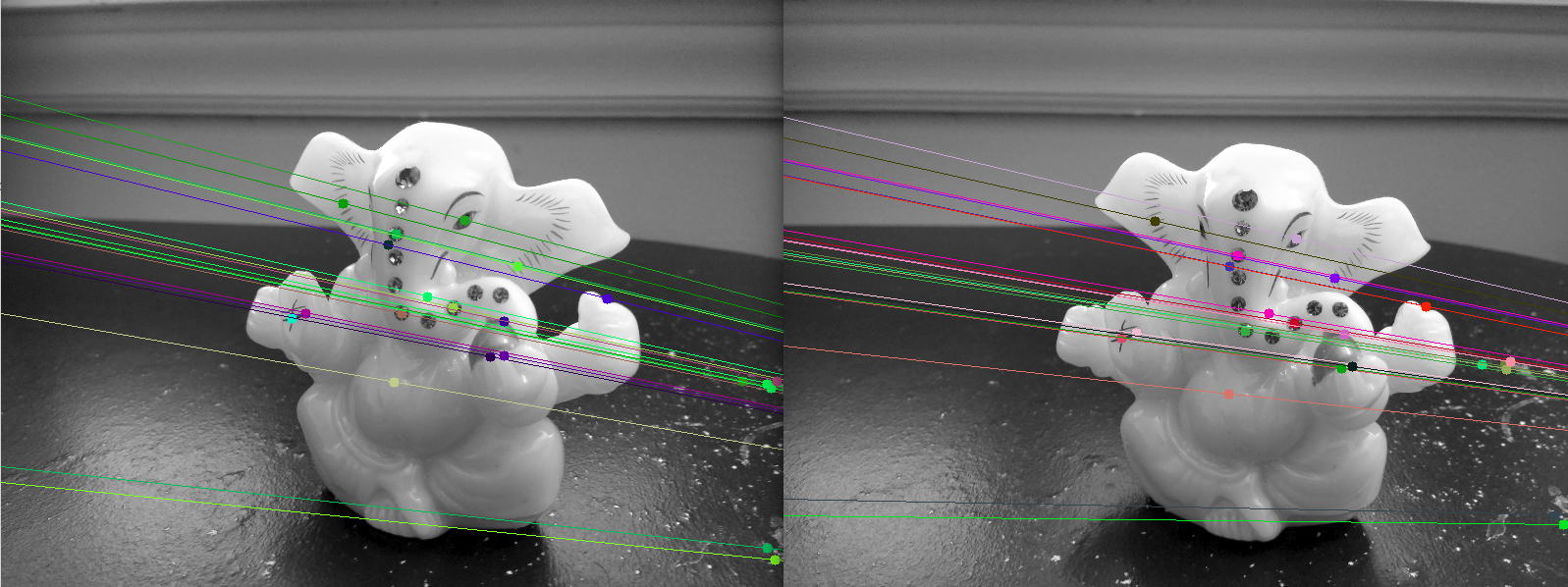

In this project, I implemented a script that, given two images of an object in a scene taken from different locations and orientations, first detects SIFT features in both images and then matches them. From these matches, I calculated the fundamental matrix using the 8-point algorithm. Using the fundamental matrix, epipolar lines were estimated and drawn in the images, as shown below.

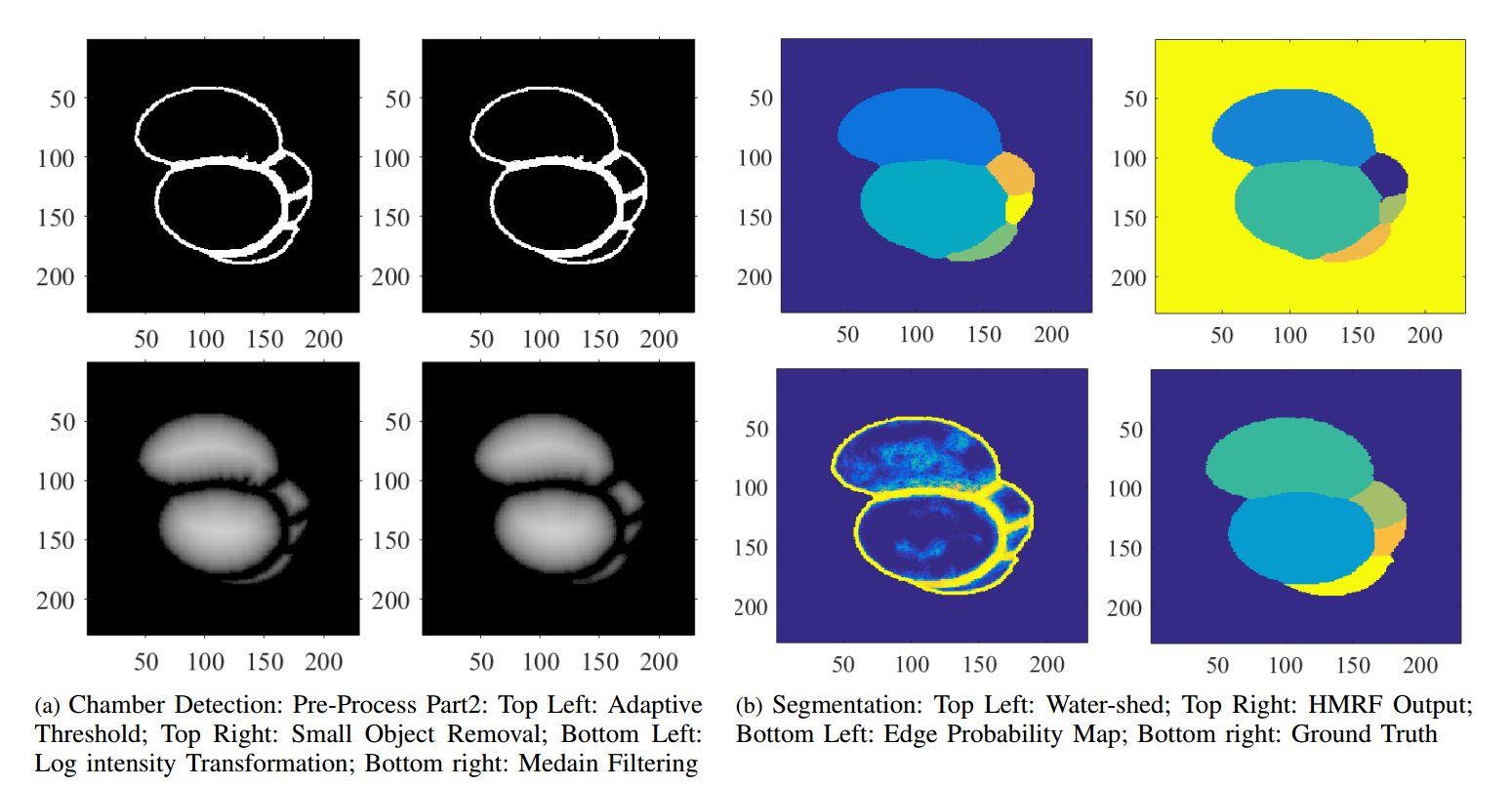

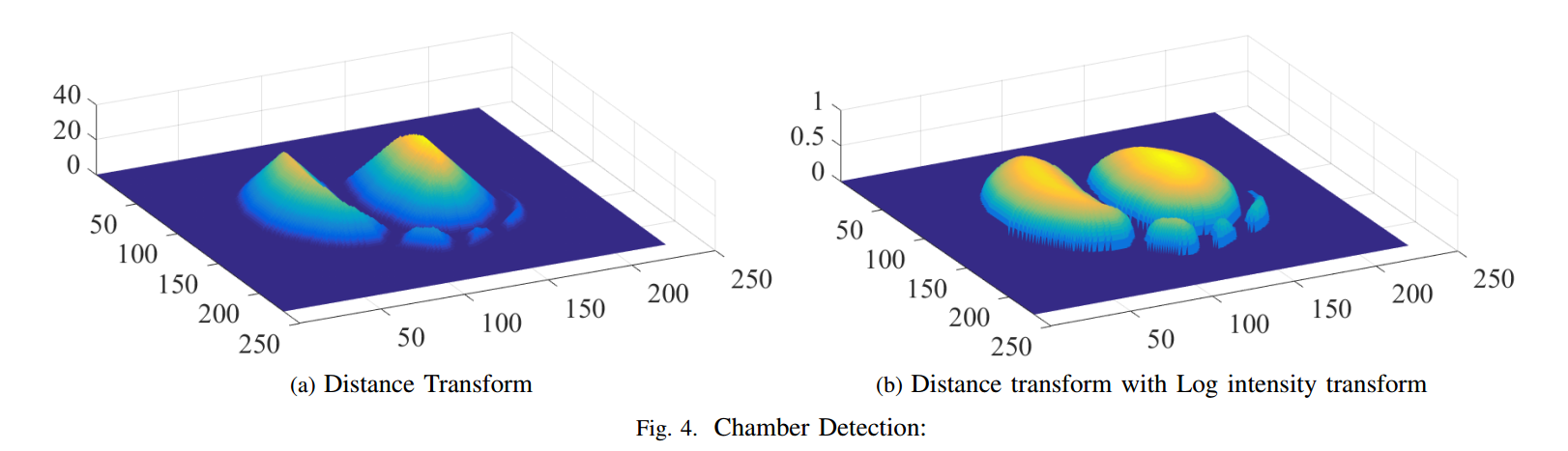
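Once a fundamental matrix F is available, the epipolar line in the second image for a point x in the first image is l' = F x, and a correct match x' satisfies x'ᵀ F x = 0. The sketch below shows only this last step. The matrix `F_DEMO` is a hypothetical example for a rectified pair (pure horizontal translation, identity intrinsics), not a matrix estimated in the project, and the function names are assumptions.

```python
def mat_vec(m, v):
    """Multiply a 3x3 matrix (nested tuples) by a 3-vector."""
    return tuple(sum(m[r][c] * v[c] for c in range(3)) for r in range(3))


def epipolar_line(F, point):
    """Line (a, b, c) with a*x + b*y + c = 0 in image 2 for a pixel in image 1."""
    return mat_vec(F, (point[0], point[1], 1.0))


def epipolar_residual(F, p1, p2):
    """Value of x2^T F x1; zero when p2 lies exactly on the epipolar line of p1."""
    a, b, c = epipolar_line(F, p1)
    return a * p2[0] + b * p2[1] + c


# Hypothetical F: skew-symmetric matrix of the translation (1, 0, 0).
F_DEMO = (
    (0.0, 0.0, 0.0),
    (0.0, 0.0, -1.0),
    (0.0, 1.0, 0.0),
)

line = epipolar_line(F_DEMO, (120.0, 80.0))
on_line = epipolar_residual(F_DEMO, (120.0, 80.0), (95.0, 80.0))
off_line = epipolar_residual(F_DEMO, (120.0, 80.0), (95.0, 90.0))
```

For this rectified example every epipolar line is horizontal (here y = 80), so a candidate match on the same image row gives a zero residual while one ten rows away does not.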

Foraminifera Image Segmentation

The goal was to classify marine biological species such as Foraminifera using image processing and computer vision techniques, given their edge probability maps. A Foraminifera species is identified from its structure, specifically from the number of chambers it possesses and its aperture. We used watershed algorithm techniques to segment the chambers and aperture, and successfully segmented them in 81% of the test data.

Industrial Projects

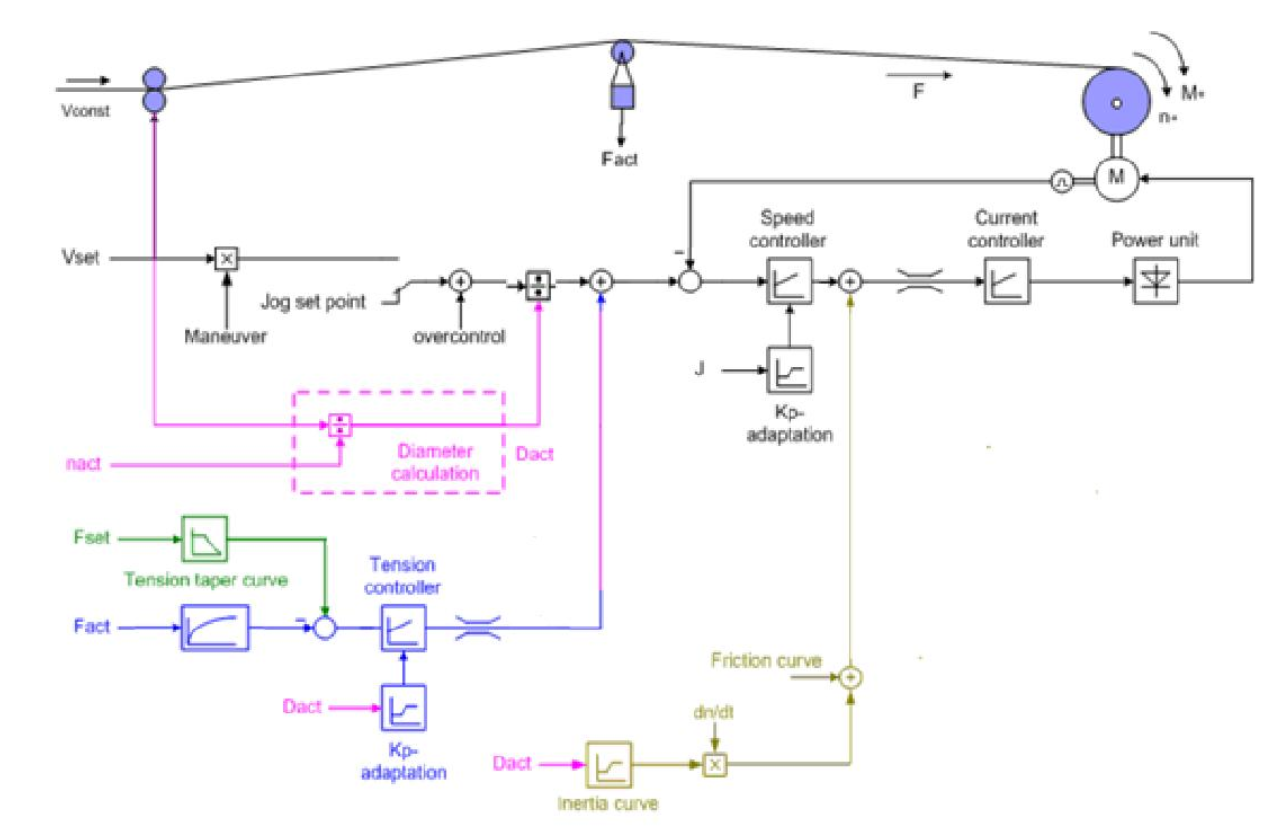

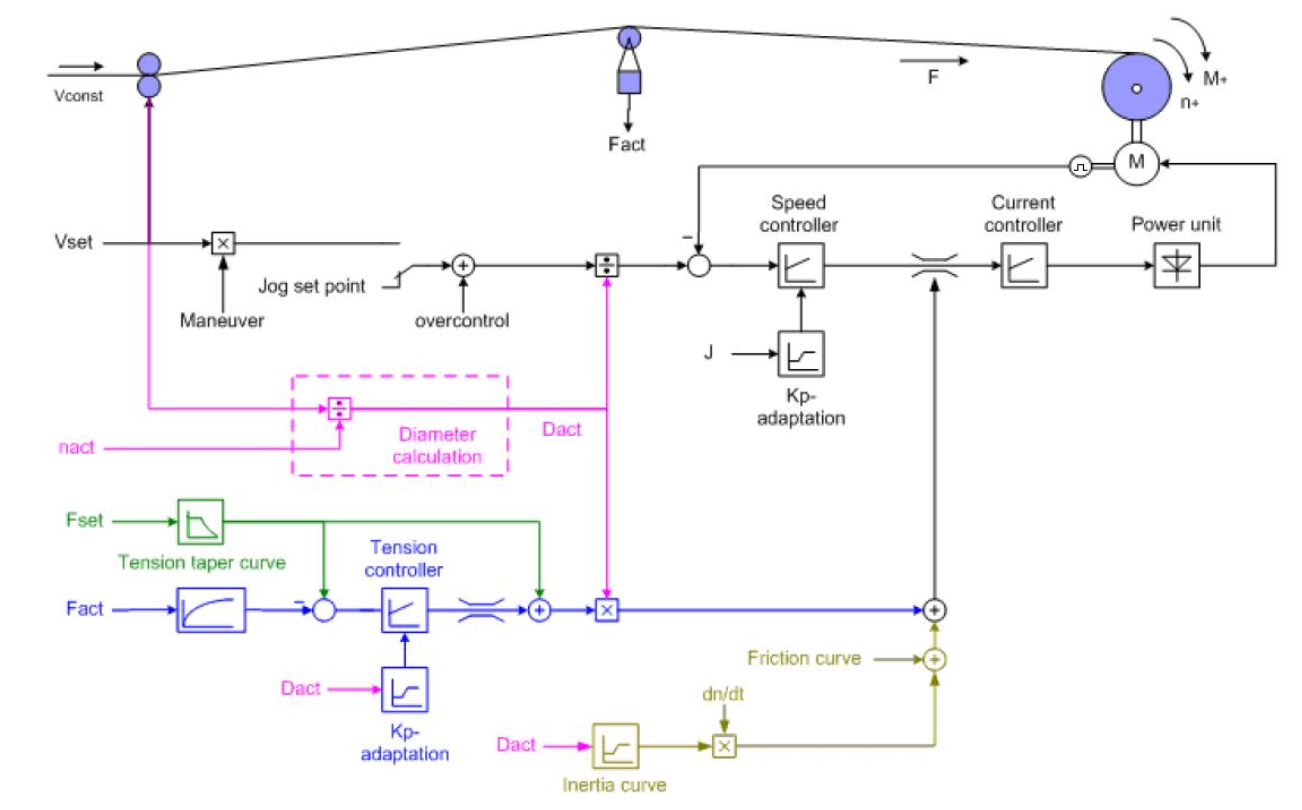

Applications: Converting applications, Winders

The software library was developed using Structured Control Language (embedded C) on SIEMENS controllers. The development aimed at easy use of basic functions, allowing the end user to implement the core functionality demanded by the application with the least possible overhead time. The function blocks within the library are generalized to cover most of the functionality, but it is an open-source library that allows the end user to modify the functionality to suit the specifics of a particular application. The library contains around 30 function blocks that provide various mathematical as well as technological functions for the motion control of converting applications. The function blocks can be combined to implement a control mechanism for motion control. Examples include tension control using either speed or torque control.

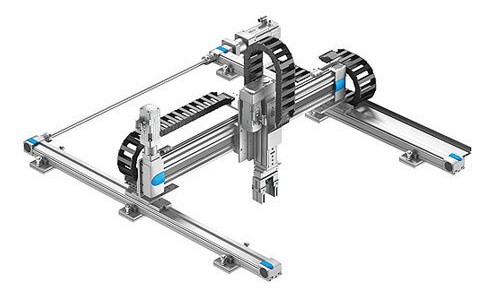

Applications: Ultrasonic Welding of Car-door Chassis using 3DOF Gantry Robot

The software development on the SIEMENS controller aimed at coordinated control of 3 linear servo motion axes using Path Interpolation technology, which gives 3 DOF to the end effector carrying the ultrasonic welding assembly. Positioning accuracy in the micrometer range made it possible to weld 48 points on the car chassis, with the points entered through a Human Machine Interface. The development was pilot tested at an OEM facility and demonstrated successful execution.
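The geometric idea behind coordinated path interpolation can be sketched in a few lines: all three axes are driven by one shared path parameter, so they start and stop together and the end effector moves along a straight line. This is an illustration only. It is not the SIEMENS Path Interpolation technology or its API, it ignores velocity, acceleration, and jerk limits, and the function name and coordinates are hypothetical.

```python
def interpolate_path(start, end, steps):
    """Setpoints on the straight line from start to end for a 3-axis gantry.

    start, end: (x, y, z) axis positions in the same unit.
    steps:      number of equal path increments (at least 1).
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return [
        # One shared parameter k / steps moves every axis in proportion.
        tuple(s + (e - s) * k / steps for s, e in zip(start, end))
        for k in range(steps + 1)
    ]


# Hypothetical move between two weld points, in millimetres.
setpoints = interpolate_path((0.0, 0.0, 0.0), (100.0, 50.0, 20.0), steps=4)
```

Each setpoint is a simultaneous target for all three axes, so the second entry here is (25.0, 12.5, 5.0) and the last entry equals the requested end position.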

Applications: Material handling, Packaging applications

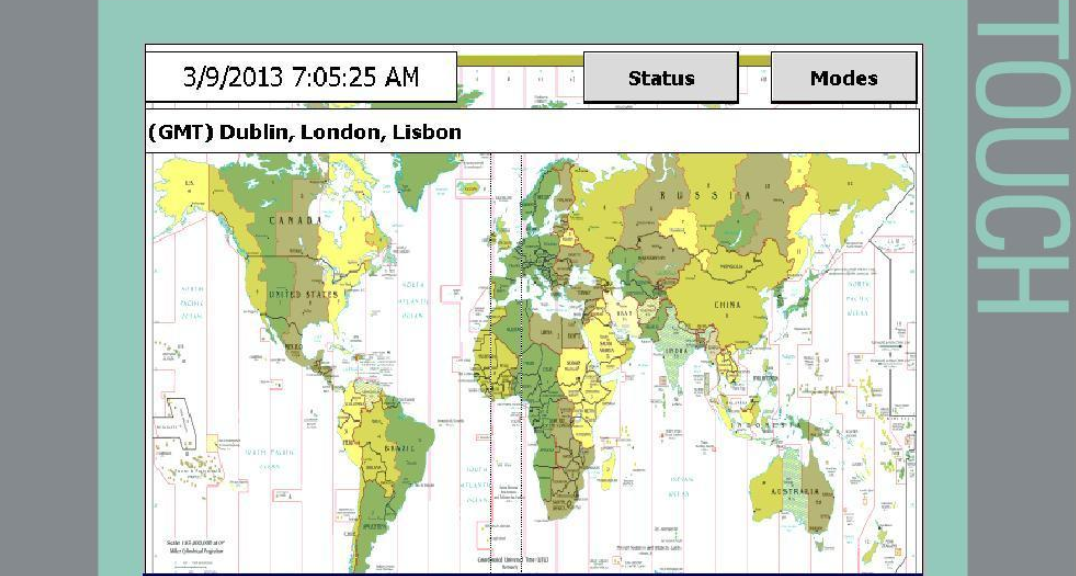

The software development on the SIEMENS controller aimed at controlling three servo axes in a position-synchronized manner. The developed library was implemented and successfully demonstrated on a clock kit, where the three hands of the clock formed the three position-synchronized axes of motion.

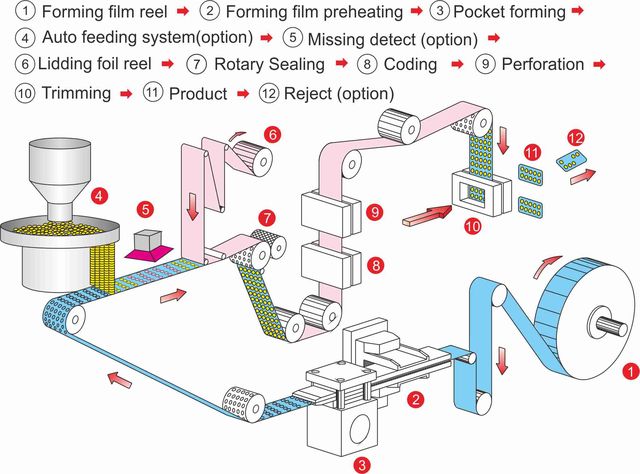

Applications: Packaging applications

The software development on the SIEMENS controller aimed at non-linear position synchronization between the film feeder and the conveyor, to produce a pulling effect on the film for packaging. The goal was achieved using electronic cam technology, which generates the same motion as a mechanical camshaft. The development was pilot tested at an OEM that develops blister packaging machines.
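An electronic cam can be thought of as a lookup table that maps the master axis position to a slave axis position, with interpolation between the table points. The sketch below assumes linear interpolation and a made-up cam table. Real motion controllers typically use smoother profiles, and nothing here reflects the SIEMENS cam API. The names `cam_position` and `CAM_TABLE` are hypothetical.

```python
from bisect import bisect_right


def cam_position(cam_table, master_pos):
    """Slave position for a master position, by linear interpolation.

    cam_table: list of (master, slave) pairs sorted by master position.
    Positions outside the table are clamped to the first or last entry.
    """
    masters = [m for m, _ in cam_table]
    if master_pos <= masters[0]:
        return cam_table[0][1]
    if master_pos >= masters[-1]:
        return cam_table[-1][1]
    i = bisect_right(masters, master_pos)  # first table point past master_pos
    (m0, s0), (m1, s1) = cam_table[i - 1], cam_table[i]
    return s0 + (s1 - s0) * (master_pos - m0) / (m1 - m0)


# Hypothetical cam: conveyor angle in degrees -> film feed in millimetres.
# The slave moves slowly at first, then faster, then slows down again.
CAM_TABLE = [(0.0, 0.0), (90.0, 10.0), (180.0, 60.0), (270.0, 110.0), (360.0, 120.0)]

feed_at_45 = cam_position(CAM_TABLE, 45.0)
feed_at_135 = cam_position(CAM_TABLE, 135.0)
```

Because the slope of the table changes between segments, the slave follows the master non-linearly, which is the behaviour a mechanical cam profile would otherwise provide.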

Publication

Temporal Logic (TL) Based Autonomy for Smart Manufacturing Systems

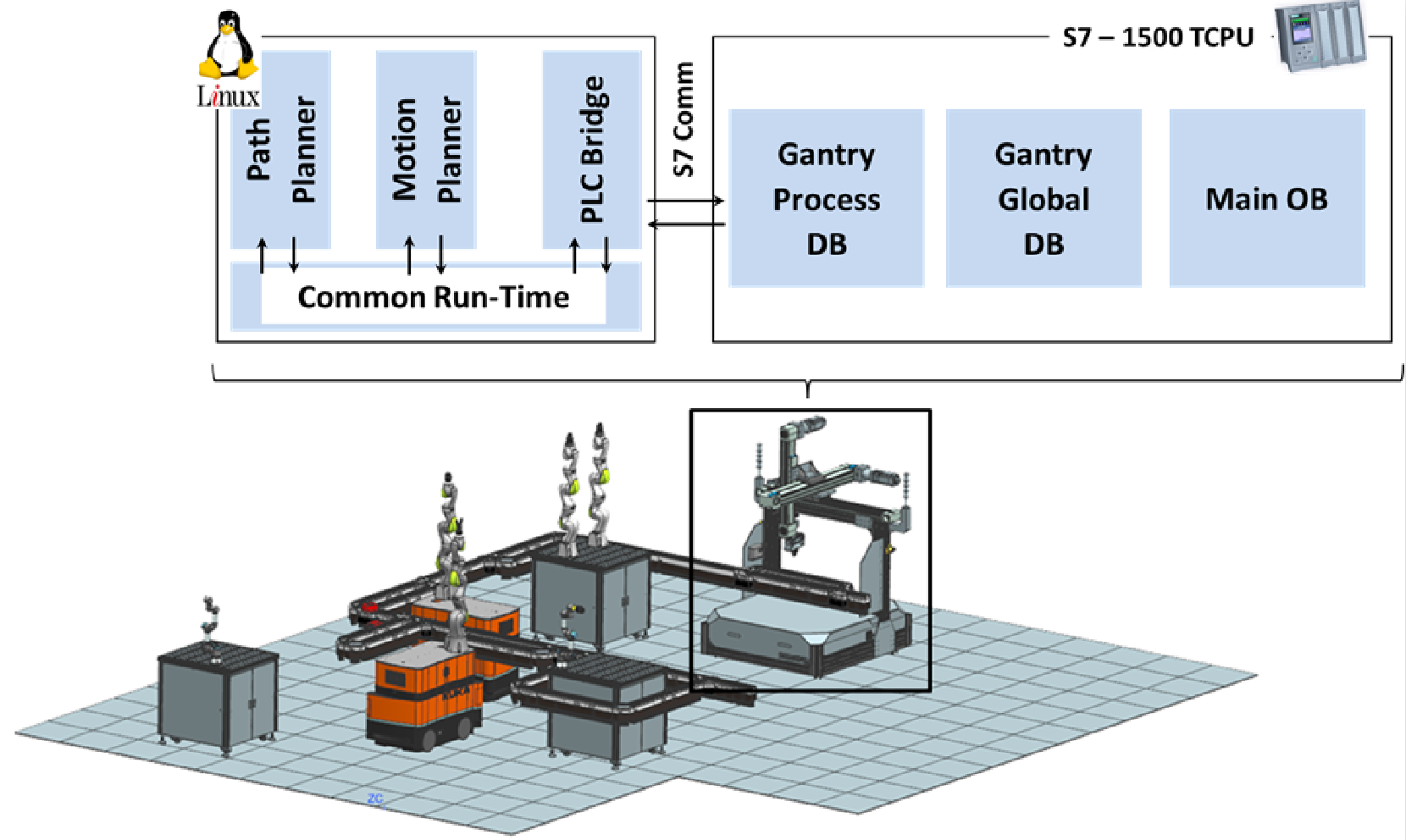

Smart-manufacturing systems are increasingly being used to perform complex tasks on the factory floor. Most often, these systems have hard-coded cases to achieve a specific set of actions or to assure the safety of the operations. The hard-coding makes it complicated to redeploy a system for different tasks. Therefore, it is necessary to have a flexible framework that can generate a plan based on an intuitive description with system constraints, while satisfying all safety conditions. In this work, we propose a Linear Temporal Logic (LTL)-based autonomy framework for smart-manufacturing systems. Specifically, we describe a general technique for formulating problems using LTL specifications. The use of LTL enables us to specify a manufacturing scenario (e.g. assembly), along with system constraints, as well as assured autonomy. Based on the given LTL formulation, a safe solution satisfying all constraints can be generated using a satisfiability solver. To eliminate the exhaustive and exponential nature of the solver, we reduced the exploration space with a divide-and-conquer approach in a receding horizon, which brings dramatic improvements in time and enables our solution for real-world applications. Our experimental evaluations indicated that our solution scales linearly as the problem complexity increases. We showcased the feasibility of our approach by integrating TL-based autonomy with the simulations of a gantry robot in Siemens NX Mechatronics Concept Designer and TIA Portal (PLCSIM Advanced) for a Siemens S7-1500 TCPU connected to Sinamics drives.